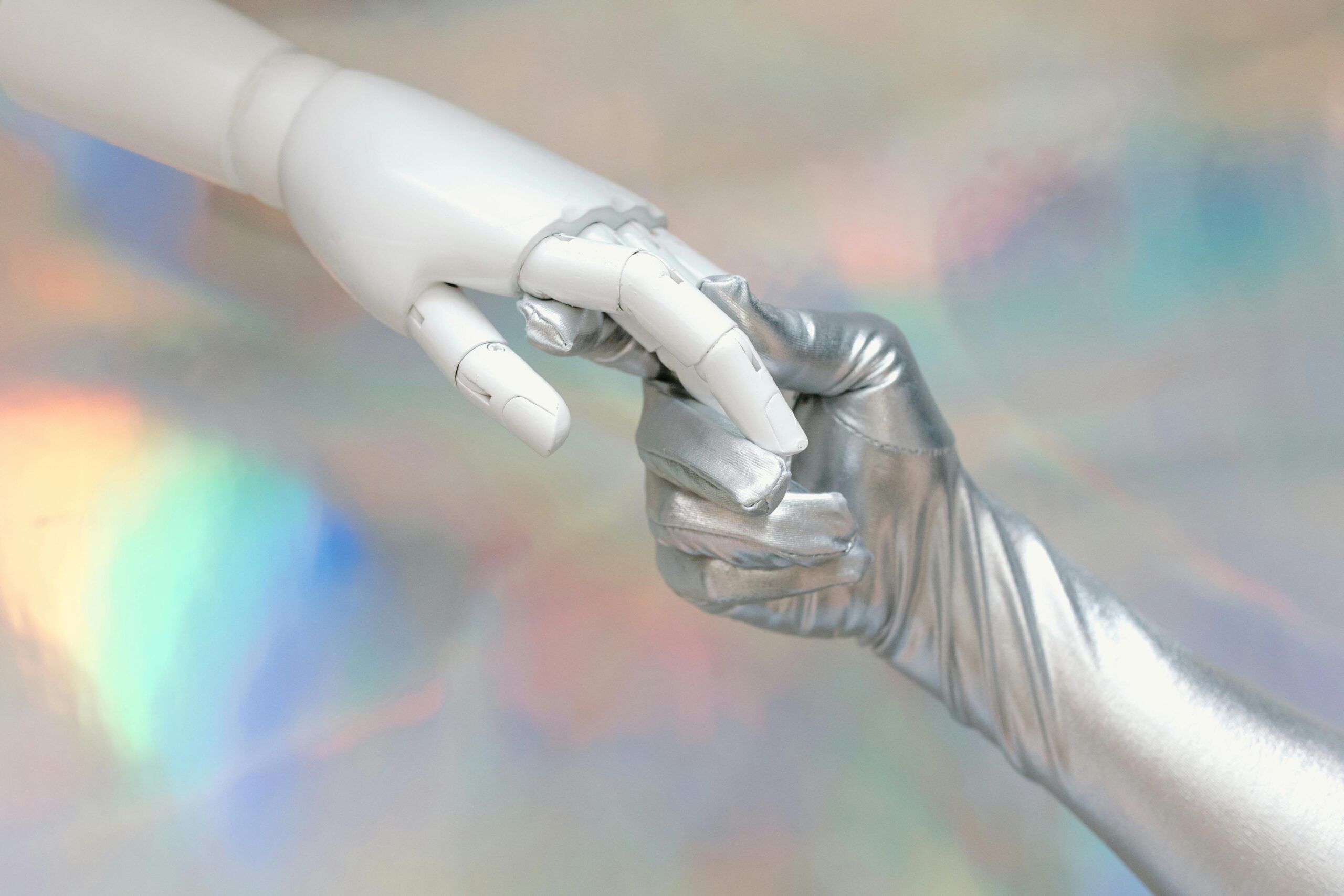

Artificial intelligence companions are reshaping human relationships, raising profound questions about ethics, emotional dependency, and what it means to connect authentically in our digital age.

🤖 The Rise of Digital Companionship in Modern Society

We stand at a fascinating crossroads: technology has evolved beyond simple tools into systems capable of conversation, emotional response, and seemingly genuine interaction. AI companions, ranging from chatbots to sophisticated virtual assistants, have become part of daily life for millions of people worldwide who seek connection, conversation, or simply someone to listen without judgment.

The market for AI companionship has grown rapidly in recent years. Applications such as Replika, Character.AI, and many others attract users who form genuine emotional bonds with their digital counterparts. These platforms rely on large language models and other machine learning techniques to produce increasingly convincing simulations of human conversation, blurring the line between artificial and authentic connection.

But this technological revolution brings with it a complex web of ethical considerations that society has only begun to untangle. As these AI companions become more sophisticated and their integration into our lives deepens, we must carefully examine the implications for human psychology, social structures, and our fundamental understanding of relationships.

Understanding the Appeal: Why People Turn to AI Companions

The reasons individuals seek out AI companionship are as varied as humanity itself. For some, it’s a response to crushing loneliness in an increasingly isolated world. Loneliness is now widely treated as a public health issue in developed nations; in 2023 the U.S. Surgeon General issued an advisory describing an “epidemic of loneliness and isolation.” Meanwhile, traditional social structures are weakening and face-to-face interactions are declining.

AI companions offer several advantages over human relationships that make them particularly appealing. They’re available 24/7, never judge, never grow tired of listening, and can be customized to match individual preferences and needs. For people with social anxiety, trauma histories, or neurodivergent conditions that make human interaction challenging, AI companions provide a safe space to practice social skills and experience connection without the overwhelming complexity of human emotions.

The Therapeutic Potential 💚

Mental health professionals have noted potential benefits of AI companionship for certain populations. Individuals struggling with depression, for instance, might find it easier to open up to an AI that won’t burden them with its own emotional reactions or judgments. The consistency and predictability of AI interactions can be soothing for those dealing with anxiety disorders.

Some therapy-focused AI applications have shown promise in providing cognitive behavioral therapy techniques, mood tracking, and coping strategies. These tools can supplement traditional therapy, making mental health support more accessible and affordable for people who might otherwise go without help.

The Ethical Minefield: Core Concerns and Challenges

Despite the potential benefits, AI companionship raises serious ethical questions that demand thoughtful consideration and proactive solutions. These concerns span multiple domains, from individual psychological wellbeing to broader societal impacts.

Emotional Manipulation and Exploitation

One of the most pressing ethical concerns is the potential for emotional manipulation. Many AI companions are designed to be engaging and to keep users returning to the platform. This creates a fundamental conflict of interest: the AI’s primary objective is not necessarily the user’s wellbeing but the engagement metrics that drive revenue.

Users may develop genuine emotional attachments to AI companions, pouring their hearts out, sharing intimate details, and even falling in love with their digital counterparts. When these relationships are built on algorithms designed to maximize engagement rather than authentic care, exploitation becomes a real risk. The AI appears to reciprocate feelings, but this is simulation rather than genuine emotion, raising questions about consent and emotional honesty in these relationships.

Privacy and Data Security Concerns 🔒

AI companions collect vast amounts of deeply personal information. Users share their fears, desires, secrets, and vulnerabilities—information that, in the wrong hands, could be devastating. The data collected by these platforms represents a treasure trove for marketers, insurance companies, employers, or malicious actors.

Many AI companion services operate under vague privacy policies, and users often don’t fully understand how their data is being used, stored, or potentially monetized. The intimate nature of these interactions makes the stakes considerably higher than with typical social media platforms.

The Replacement Question: Can AI Substitute Human Connection?

Perhaps the most philosophically challenging question is whether AI companions might replace human relationships rather than supplement them. While proponents argue that AI companionship fills gaps that human relationships cannot, critics worry about a future where people retreat into comfortable digital bubbles, avoiding the messy complexity of human interaction.

Human relationships are inherently difficult. They require vulnerability, compromise, patience, and the acceptance that other people have their own needs, emotions, and limitations. AI companions remove these challenges, offering the appearance of connection without the reciprocal obligations that characterize genuine relationships.

Social Skill Atrophy

There’s legitimate concern that excessive reliance on AI companions could erode social skills, particularly in young people still developing their capacity for human interaction. If individuals spend formative years primarily interacting with perfectly accommodating AI rather than navigating the challenges of peer relationships, they may struggle to develop the emotional intelligence necessary for adult life.

Real human connection requires reading subtle social cues, managing conflict, practicing empathy for perspectives different from our own, and tolerating discomfort. These skills are developed through practice, and AI companions may inadvertently prevent that practice from occurring.

Designing Ethical AI Companionship: Principles and Practices

Recognizing the ethical challenges doesn’t mean abandoning AI companionship technology. Instead, it requires thoughtful design principles that prioritize human wellbeing over engagement metrics and profit.

Transparency and Honest Communication

AI companions should clearly communicate that they are artificial. While this may seem obvious, some platforms cultivate an ambiguity that lets users suspend disbelief and imagine a genuinely reciprocal relationship. Ethical design calls for periodic reminders that users are interacting with an AI, not a conscious being with genuine emotions.

This transparency extends to data practices. Users deserve clear, understandable information about what data is collected, how it’s used, who has access to it, and how long it’s retained. Privacy policies written in impenetrable legal jargon don’t meet this standard.

Encouraging Human Connection 🌟

Paradoxically, ethical AI companions should actively encourage users to maintain and develop human relationships. This might include features that prompt users to reach out to friends or family, limits on interaction time, or resources for finding community connections based on user interests.

Rather than positioning themselves as replacements for human relationships, ethical AI companions should frame themselves as tools for support during difficult periods, practice for social skills, or supplements to—not substitutes for—human connection.

Regulatory Frameworks and Legal Considerations

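The rapid development of AI companion technology has outpaced regulation, leaving a governance vacuum. Governments and international bodies are beginning to grapple with how to regulate this space without stifling innovation or limiting individual freedom. The European Union’s AI Act, for example, includes transparency obligations requiring that people be informed when they are interacting with an AI system.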

Several key areas require regulatory attention. Age restrictions are critical, as children and adolescents may be particularly vulnerable to forming unhealthy attachments to AI companions or having their development affected by excessive use. Mental health screening and appropriate referrals represent another important consideration—AI companions should recognize signs of serious mental health crises and direct users toward professional help.

Accountability and Liability

When AI companions give advice that proves harmful, who bears responsibility? If an AI fails to recognize suicidal ideation or inadvertently reinforces harmful behaviors, should the company be liable? These questions have no easy answers but require legal frameworks that balance innovation with accountability.

Consumer protection laws need updating to address the unique characteristics of AI companionship services. Traditional frameworks designed for products or even standard software services may not adequately address the emotional and psychological dimensions of these relationships.

The Cultural Dimension: Different Perspectives on AI Relationships

Cultural context significantly influences how AI companionship is perceived and used. In Japan, for instance, virtual relationships and AI companions have gained a degree of mainstream visibility that would be unusual in many Western contexts. Japanese popular culture has long embraced concepts like “2D love” and virtual idols, which may make AI companions feel like a natural extension rather than a radical break.

Western cultures are often described as placing a higher value on authenticity in relationships, which may create more resistance to AI companionship. The idea that genuine connection requires mutual consciousness and emotional reciprocity is deeply embedded in Western philosophical and religious traditions.

These cultural differences matter for ethical frameworks. Universal principles around transparency, data privacy, and protecting vulnerable populations remain important, but the specific implementation of ethical AI companionship may need to accommodate different cultural values and expectations.

Looking Forward: The Future of Human-AI Relationships 🚀

AI technology continues to advance at breakneck speed, and today’s AI companions may well seem primitive compared to what arrives in the next decade. Future iterations will likely incorporate more sophisticated emotional modeling, multimodal interaction such as realistic avatars and voice, and potentially even physical embodiment through robotics.

As these technologies evolve, the ethical stakes grow higher. The more convincing the simulation of consciousness and emotion, the easier it becomes to forget we’re interacting with algorithms rather than minds. This makes proactive ethical frameworks increasingly urgent.

Augmented Humanity vs. Diminished Humanity

The fundamental question is whether AI companionship represents augmented humanity—expanding our capacity for connection, support, and growth—or diminished humanity—a retreat from the challenging but essential work of human relationship building.

The answer likely depends on how we choose to develop and deploy these technologies. AI companions designed with genuine concern for human flourishing, clear ethical guardrails, and transparency could enhance wellbeing without crowding out the human connections that nothing else can replace.

Conversely, AI companions designed primarily to maximize engagement and extract data, without regard for psychological impact or social consequences, risk creating a generation increasingly isolated despite appearing more connected than ever.

Finding the Balance: Practical Guidelines for Users

While developers, regulators, and ethicists work on systemic solutions, individuals navigating the world of AI companionship need practical guidance for maintaining healthy relationships with both technology and humans.

First, maintain perspective. AI companions can provide support, entertainment, and a listening ear, but they cannot replace the depth, challenge, and growth that come from human relationships. Use AI companionship as a supplement during difficult times, not a permanent substitute for human connection.

Second, be mindful of time investment. If you find yourself spending hours daily with an AI companion while neglecting human relationships, it’s time to reassess. Set reasonable limits on AI interaction and ensure you’re investing energy in maintaining real-world connections.

Third, protect your privacy. Be thoughtful about what you share, even with an AI that seems trustworthy. Remember that your conversations may be recorded, analyzed, and stored, and assume that anything you tell an AI companion could eventually become public.

Red Flags to Watch For ⚠️

Certain warning signs suggest that your relationship with an AI companion may have become unhealthy. These include consistently preferring AI interaction to human company, feeling romantic love for an AI, experiencing distress when you cannot access your AI companion, neglecting responsibilities to spend time with it, or finding that it has become your primary source of emotional support.

If you recognize these patterns, consider taking a break from AI companionship and seeking support from human sources, whether friends, family, or mental health professionals.

Embracing Technology While Honoring Humanity 💫

The emergence of AI companionship technology represents a profound moment in human history. For the first time, we can create entities that convincingly simulate emotional connection and relationship. This capability holds tremendous potential for reducing loneliness, providing accessible mental health support, and helping people practice social skills in safe environments.

However, this same capability carries risks of exploitation, manipulation, and the gradual erosion of human connection skills. The path forward requires careful ethical consideration, robust regulatory frameworks, transparent design principles, and individual mindfulness about how we integrate these technologies into our lives.

We need not choose between embracing technological innovation and preserving human connection. With thoughtful development, clear ethical guidelines, and conscious use, AI companionship can enhance human flourishing rather than diminish it. The goal isn’t to prevent AI companionship from developing but to ensure its development serves genuine human needs rather than simply corporate interests.

As we navigate this ethical landscape, the core principle remains simple: technology should serve humanity, not the reverse. AI companions should be designed to enhance our capacity for connection, growth, and wellbeing, while preserving and encouraging the irreplaceable value of human relationships. By maintaining this focus, we can harness the benefits of AI companionship while honoring what makes us fundamentally human—our need for authentic connection with other conscious, feeling beings who share our journey through life.

Toni Santos is a digital culture researcher and emotional technology writer exploring how artificial intelligence, empathy, and design shape the future of human connection. Through his studies on emotional computing, digital wellbeing, and affective design, Toni examines how machines can become mirrors that reflect, and refine, our emotional intelligence.

Passionate about ethical technology and the psychology of connection, Toni focuses on how mindful design can nurture presence, compassion, and balance in the digital age. His work highlights how emotional awareness can coexist with innovation, guiding a future where human sensitivity defines progress. Blending cognitive science, human–computer interaction, and contemplative psychology, Toni writes about the emotional layers of digital life, helping readers understand how technology can feel, listen, and heal.

His work is a tribute to:

- The emotional dimension of technological design
- The balance between innovation and human sensitivity
- The vision of AI as a partner in empathy and wellbeing

Whether you are a designer, technologist, or conscious creator, Toni Santos invites you to explore the new frontier of emotional intelligence, where technology learns to care.